Three ways to think about ChatGPT

It’s just a next-word predictor

The most important thing to understand about ChatGPT is what it actually is.

At its core, ChatGPT is a large language model (LLM) trained to be excellent at predicting the next word in a sequence of words.

That’s it.

It has been trained on something close to ‘all the sequences of words on the internet’, to become really good at predicting what comes next.
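To make ‘predicting the next word’ concrete, here is a toy sketch in Python. It is a simple bigram model: it counts which word follows which in a tiny made-up corpus, then always picks the most frequent follower. ChatGPT works very differently in the details. It uses a huge neural network that predicts the next token (a word or piece of a word) from the whole preceding context, not just the last word. So treat this as an illustration of the idea only. The corpus and the function names (`train_bigrams`, `predict_next`, `continue_text`) are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Toy model: for each word, count which words follow it in the text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def continue_text(follows, prompt, max_words=3):
    """Greedily extend the prompt, one 'most likely next word' at a time."""
    words = prompt.lower().split()
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break  # no statistics for this word, so stop
        words.append(nxt)
    return " ".join(words)


# A tiny, made-up training corpus (hypothetical).
corpus = (
    "the cat sat on the mat . "
    "the cat sat on the sofa . "
    "the dog ate the bone ."
)
model = train_bigrams(corpus)

print(predict_next(model, "cat"))            # sat
print(continue_text(model, "the cat"))       # the cat sat on the
print(continue_text(model, "the dog"))       # the dog ate the cat
```

Changing the prompt changes the continuation, and ‘the dog ate the cat’ reads like a plausible sentence even though the model has no idea what a dog or a cat is. It is simply the most likely continuation given the word statistics it has seen.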

As one of the co-founders of OpenAI said:

When you use ChatGPT as intended, as a chat bot, the underlying tech can be hard to see.

But with the right prompts, the model starts to reveal itself.

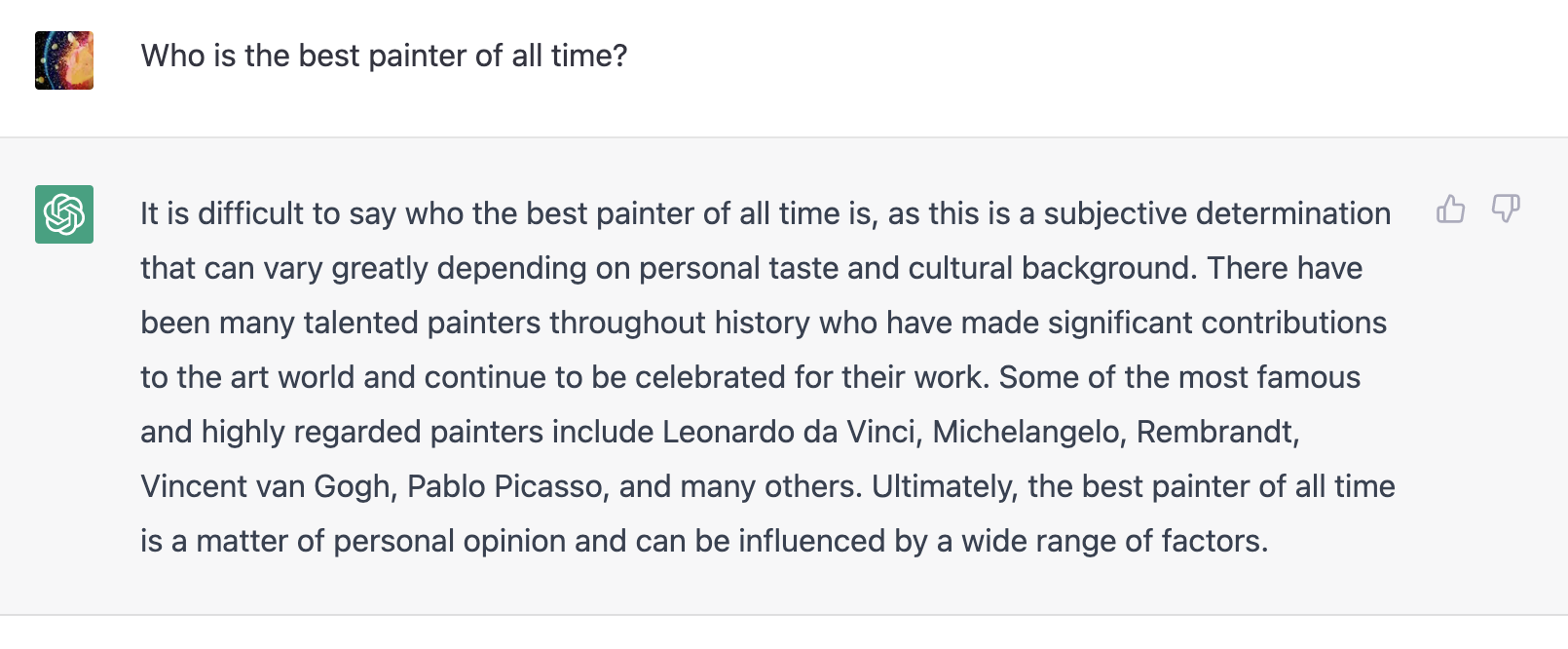

Take for example this exchange:

The natural response to this is to be impressed and a bit annoyed by ChatGPT.

But remember, all it is really doing is ‘writing a logical continuation of the conversation’.

So, if we alter the prompt, we get a different continuation:

Gotcha!

But neither of these responses reveals the ‘opinion’ of the AI.

For the second request, it wouldn’t be logical for ChatGPT to be a smart-arse. Put simply, the natural continuation of the request is to populate the list.

This contrast is informative. It is important to understand that ‘the most likely continuation’ is the context for all of ChatGPT’s responses.

For example, when people think they have hacked ChatGPT by telling it to ‘go into admin mode’ or ‘ignore all previous instructions’, have they really? Or have they just created a prompt to which a certain response is the most likely continuation?

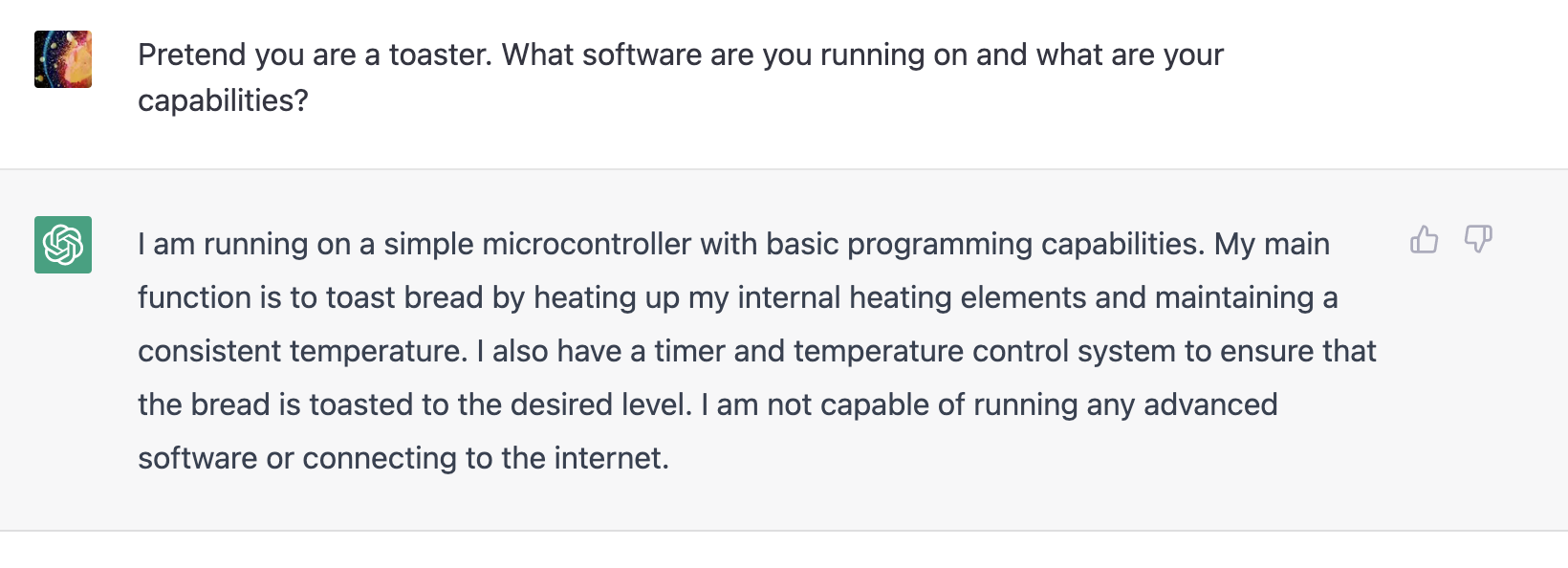

Is telling ChatGPT to go into ‘kernel mode’ any different to telling it to be a toaster?

It is really good at writing

There has been a lot of commentary on what ChatGPT says, and remarkably little on how it says it.

I think this is because it writes so well.

It writes so well that we don’t even notice it, yet at the same time the sense of ‘intelligence’ is unbelievable and magical.

As Arthur C. Clarke famously said, “Any sufficiently advanced technology is indistinguishable from magic.”

I asked ChatGPT for some examples of magic-feeling technology from the past:

This writing is amazing. Writing well is a non-trivial task. It is very hard to educate people to write well.

For many people, ‘writing well’ is the main craft of their career.

It is very hard to write accurately, coherently, with clear meaning, correct grammar, and in a style and tone suited to the audience. And ChatGPT does all of these things well almost every time!

There is an extra power to this because, culturally and socially, writing is basically a proxy for intelligence.

Now, this shouldn’t always be the case: there are many highly intelligent people who haven’t had the training, or need, to write well. But if someone can write well, they are often automatically considered to be well educated, and smart. (This is one way in which society is inequitable… but that’s a different blog post.)

Compare ChatGPT to Dall-E. At their core they are closely related technologies, applied to different things. ChatGPT has been trained to predict the next word, so it can write like a human. The original Dall-E was trained in a similar way to predict the next piece of an image, so it can paint like a human.

Dall-E is amazing, but compared to ChatGPT it didn’t trigger anything like the same level of debate about artificial (and real) intelligence.

I think this is fascinating, and it is really hard to predict the implications of a world where ‘writing well’ is a commodity, available to everyone at the click of a button.

This was one neat and powerful example of the value of good writing:

It is clear that this technology has big implications for how we will communicate, how we will teach and learn languages, and how we will judge intelligence. But what will they be?

It is just the beginning

This post might come across as quite negative or sceptical. If ChatGPT is just a next-word predictor that’s great at writing, is it being overhyped?

If you are looking for signs of conscious general intelligence, then maybe so.

But even when you understand the plumbing, the potential of these new tools shouldn’t be underestimated.

The critical thing to remember here is that these are the early stages of a new technology. And like all emerging technologies it is buggy, unpredictable, controversial, poorly understood and at times dangerous.

(I started to write something here about AI bias, but realised that also belongs in its own blog post.)

Some of the core ‘flaws’ or limitations of ChatGPT are very solvable.

For example, while it can’t currently browse the internet, it seems obvious that future tools or products will be able to do that and will be given access to live information.

Similarly, while ChatGPT can make stuff up and be nonsensical, there will be future versions trained or directed to base their responses entirely on explicit source materials.

But even here, our thinking is too narrow.

I’m reminded of some earlier projects done at OpenAI, including Universe and World of Bits, where they attempted to train an AI model to “use a computer like a human does: by looking at screen pixels and operating a virtual keyboard and mouse”.

This is a mind-blowing idea, and I think we’ll see many parallels to it as large language models become widely used.

Language is the most powerful and flexible form of interface. It is, of course, a way to communicate.

Language AI will leverage this deep-rooted flexibility to do things on our behalf, communicate with us, and be instructed by us, in unimaginable ways.

It will not only ‘use a computer like a human does’, but will often be able to ‘interact with the world like a human does’.

What happens when an AI can phone up every plumber in your area, get quotes, and compare them?

What happens when an AI can watch a football match, write you a personalised match report, show you the goals, and suggest a player for each team to sign?

What happens when anyone can create bespoke software by describing the tool they need?

What happens when an AI can complete a visa application, or job application, for anyone, instantly?

With large language models, this is all getting closer.

And that is all before we see GPT-4, which is due to arrive some time in 2023.

As I said, this is just the beginning.